Is Your Internet Privacy at Risk? Shocking New Study Reveals 85% of Users Say YES!

Should verified identities become the standard online? Australia’s social media ban for under-16s shows why the question matters.

14 Jan 2026 • 5 min. read

New legislation in Australia has made it illegal for individuals under the age of 16 to maintain social media accounts. To comply with this law and avoid financial penalties, social media companies are racing to remove accounts they believe violate this mandate. However, there are no repercussions for minors who attempt to create accounts using a false age. As the first country to implement such a ban, Australia is now under scrutiny as others watch to see whether the legislation proves effective and achieves its intended outcomes.

As we step into 2026, this situation raises a broader question: is this the year when the global community reevaluates the concept of identity online?

Rethinking Online Identity

While Australia's new regulations stem from legitimate concerns regarding the risks children face on social media platforms, banning access altogether may not be the most effective solution. The underlying issues persist. Once a user turns 16, do they suddenly become an acceptable target for harmful content? All internet users deserve protection from abusive interactions and toxic environments. Historical precedents suggest that banning access can increase demand. I recall that when radio stations banned “Relax” by Frankie Goes to Hollywood, the ban only heightened its popularity and kept it at number one longer. Similarly, prohibiting social media access could drive under-16s to seek out alternative platforms, potentially exposing them to even greater risks.

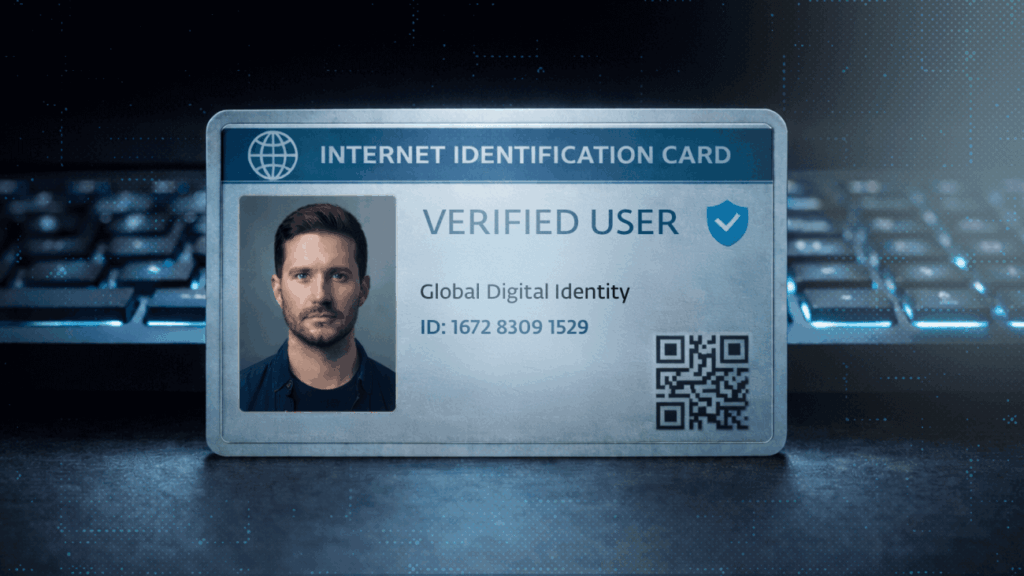

Other countries, including some U.S. states, are also pursuing age-verification legislation targeting adult content, leading to the development of various age-verification technologies. Some employ real-time age assessments based on facial recognition, while others depend on government-issued identification or financial documents. Each of these methods raises concerns about privacy, data collection, and storage.

The situation is further complicated by the prevalence of phishing scams, romance frauds, and other forms of cybercrime, prompting us to question whether the current frameworks governing internet services are indeed fit for purpose.

Imagine explaining to someone three decades ago that a small device would someday allow them to connect with anyone, shop, and stream content—all while also facilitating bullying and anonymous abuse. If we had foreseen these negative aspects, many might have reconsidered the adoption of such technology.

The Reality of Online Abuse

There’s a common misconception that abusive behavior online originates from distant places or anonymous sources. However, a recent investigation by the BBC revealed that, in just one weekend, there were over 2,000 extremely abusive social media posts aimed at managers and players in the Premier League and Women’s Super League. Some of these posts were so egregious that they included threats of death and sexual violence. Identifying the individuals behind these posts is nearly impossible, given that most social media accounts do not require formal identification, and the use of VPNs complicates tracking even further.

As it currently stands, users can create accounts on many platforms using any identity they choose, because those services do not require identity verification. This capability for anonymity has long been a fundamental aspect of online freedom, though whether it was intentionally designed this way is debatable. The fear among companies is that requiring verified identities would add friction to account creation and lead to a decline in user numbers, a significant concern for platforms that rely on user engagement for advertising revenue.

This brings us to the pivotal question: should we accept a system where the internet distinguishes between verified and non-verified individuals? While I’m not advocating for identity verification on all platforms, having the option to filter out content from non-verified users could drastically improve the online experience. For instance, Premier League players could engage on social media without being subjected to a deluge of abuse. Moreover, if a verified user were to issue threats, law enforcement could more easily identify them and take appropriate action.

The benefits could extend beyond social media. Currently, my email inbox allows me to separate general correspondence from actionable messages. Introducing a third category for unverified senders could bolster security against targeted attacks. Cybercriminals could still hijack verified accounts, so verification is not a foolproof safeguard but rather an additional layer of protection.

It is crucial to note that identity verification doesn’t necessarily mean sacrificing anonymity. For example, a dating platform could verify that each subscriber is a real person while allowing them to adopt any profile identity they choose. The real advantage comes from knowing that all users have undergone verification, making it easier to hold individuals accountable for any abusive or fraudulent behavior.

Transitioning to a system where verified identities are the norm would represent a significant shift from the status quo. While claims about restrictions on freedom of speech would undoubtedly arise, the reality is that verified identities would not silence voices or limit expression; rather, they would empower users to filter out noise and abuse from unverified individuals. It’s clear that the current measures to limit content by age are failing to adequately address the issue of harmful or illegal content. Furthermore, banning minors from social media may only drive them underground or prompt them to circumvent restrictions, arguably creating a more dangerous environment rather than mitigating risks.
